How to Load and Transform into BigQuery Wildcard Tables

BigQuery Wildcard Tables

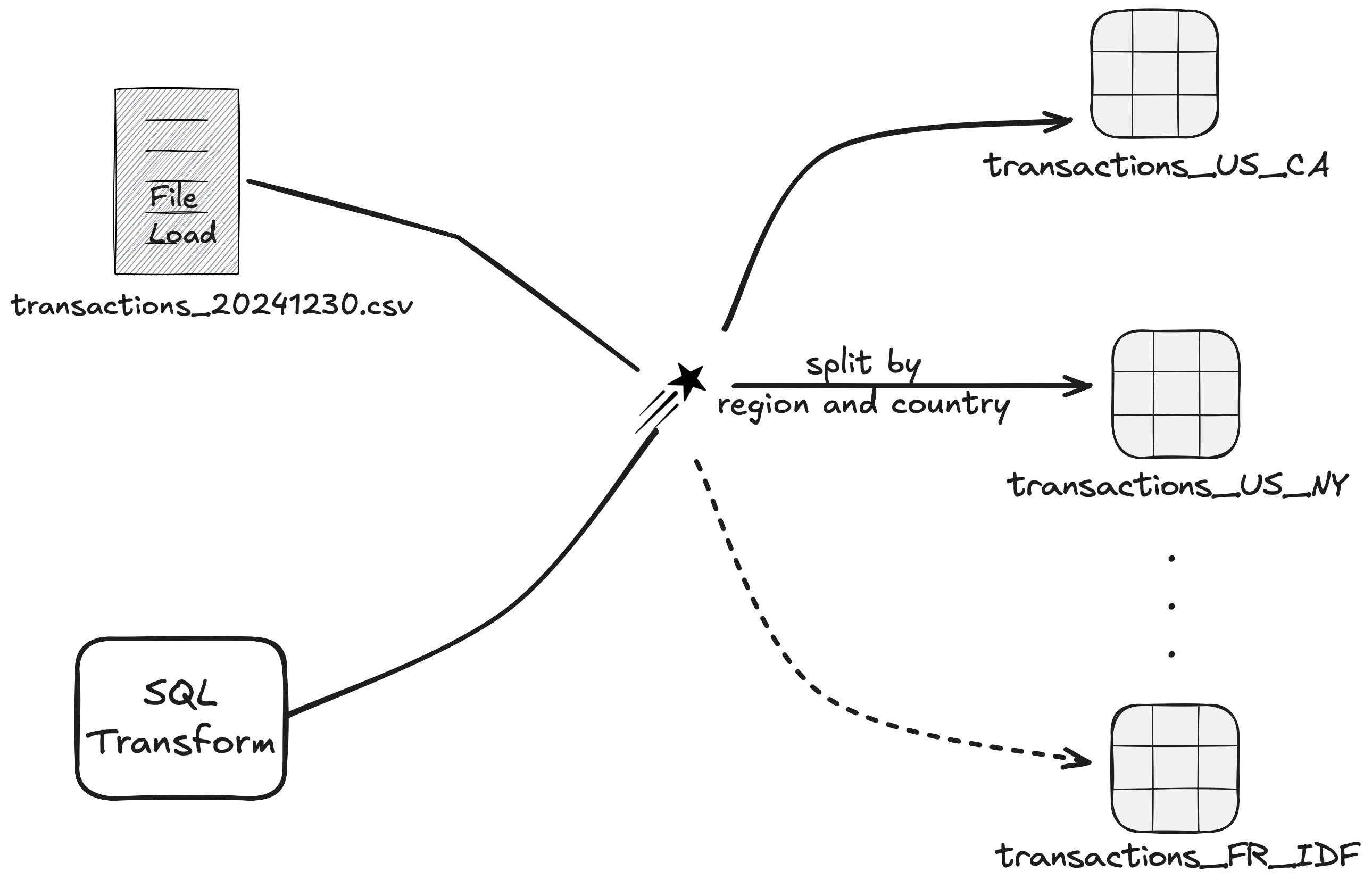

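When loading files into BigQuery, you may want to split your data into partitions so that queries scan less data, run faster, and cost less. However, BigQuery's native partitioning only works on a time-unit column (DATE, TIMESTAMP, or DATETIME), on ingestion time, or on an integer range. Partitioning on a string column isn't directly supported, but wildcard tables offer a workaround with nearly the same benefits: each value of the string column is stored in its own table sharing a common prefix, and queries read those tables through a wildcard such as `dataset.table_*`, filtering on the `_TABLE_SUFFIX` pseudo-column so that only the relevant tables are scanned.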
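To make the mechanism concrete, here is a minimal Python sketch, independent of Starlake, of string-based "partitioning" with wildcard tables: rows are grouped by the value of a string column into tables that share a prefix, and a query targets a single value through `_TABLE_SUFFIX`. The `sales_` prefix, the project and dataset names, and the suffix-sanitizing rule are hypothetical assumptions for illustration. The sketch only builds table names and SQL text; it does not call BigQuery.

```python
import re
from collections import defaultdict


def table_suffix(value: str) -> str:
    """Turn a partition value into a table-name suffix (hypothetical convention).

    Keeps ASCII letters, digits and underscores; every other character
    becomes "_", so the suffix is safe to embed in a SQL string literal.
    """
    return re.sub(r"[^A-Za-z0-9_]", "_", value)


def split_by_column(rows, column, prefix):
    """Group rows into per-value tables named <prefix><suffix>."""
    tables = defaultdict(list)
    for row in rows:
        tables[prefix + table_suffix(row[column])].append(row)
    return dict(tables)


def wildcard_query(project, dataset, prefix, value):
    """Build a query over the wildcard table that reads only one value's table."""
    return (
        f"SELECT * FROM `{project}.{dataset}.{prefix}*` "
        f"WHERE _TABLE_SUFFIX = '{table_suffix(value)}'"
    )


if __name__ == "__main__":
    # Hypothetical sample rows, partitioned on the string column "country".
    rows = [
        {"country": "FR", "amount": 10},
        {"country": "US", "amount": 7},
        {"country": "FR", "amount": 3},
    ]
    for name, table_rows in split_by_column(rows, "country", "sales_").items():
        print(name, len(table_rows))
    print(wildcard_query("my-project", "my_dataset", "sales_", "FR"))
```

Because the filter on `_TABLE_SUFFIX` compares against a constant, BigQuery can limit the scan to the matching table. Note that this simple sanitizing rule can map distinct values (for example `a-b` and `a b`) to the same suffix, so a real pipeline would need a collision-free naming scheme.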

In this article, we show how Starlake simplifies the process by loading your data directly into wildcard tables.