Incremental models, the easy way.

One of the key advantages of Starlake is its ability to handle incremental models without requiring state management. This follows from Starlake being an integrated, declarative data stack: it uses the same YAML DSL for both loading and transforming, and it leverages the backfill capabilities of your target orchestrator.

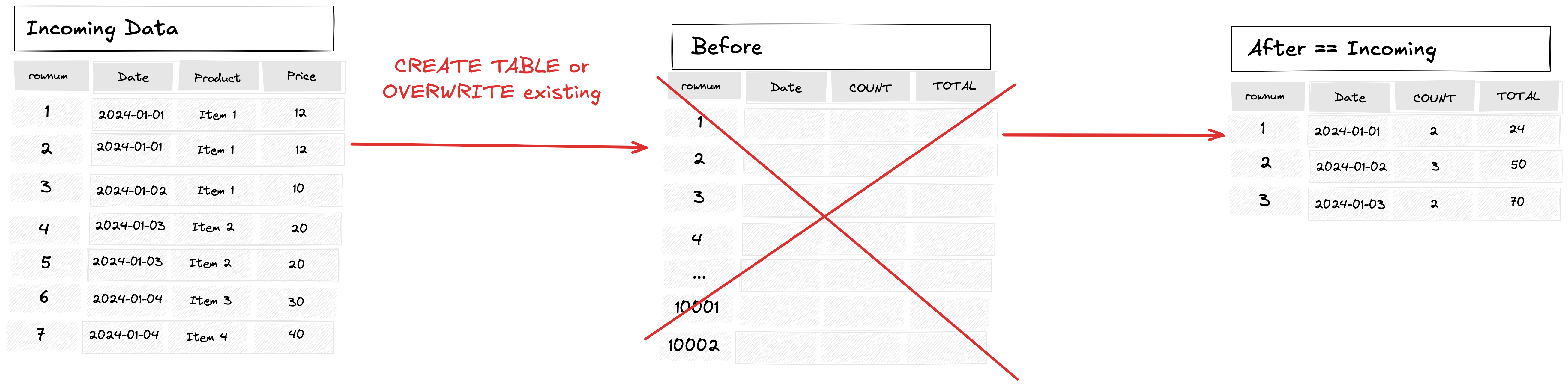

When running transformations, we encounter two cases:

Full-refresh

The existing data is overwritten, or a new table is created if it did not exist before.
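To make the full-refresh case concrete, here is a minimal sketch in Python using the standard-library sqlite3 module. It is not Starlake code: the sales and daily_sales tables, their columns, and the full_refresh helper are all hypothetical names chosen for illustration. It only shows the behaviour described above, where the target is rebuilt from the whole input on every run.

```python
import sqlite3


def full_refresh(conn: sqlite3.Connection) -> None:
    """Rebuild daily_sales from the entire sales table (hypothetical schema)."""
    # Drop the previous result, if any, then recreate it from all input rows.
    conn.execute("DROP TABLE IF EXISTS daily_sales")
    conn.execute(
        """
        CREATE TABLE daily_sales AS
        SELECT date(sold_at) AS day, SUM(amount) AS total
        FROM sales
        GROUP BY date(sold_at)
        """
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sold_at TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("2024-01-01 09:00:00", 10.0),
        ("2024-01-01 17:30:00", 5.0),
        ("2024-01-02 11:00:00", 7.0),
    ],
)

full_refresh(conn)
full_refresh(conn)  # a second run overwrites the table instead of duplicating rows
rows = conn.execute("SELECT day, total FROM daily_sales ORDER BY day").fetchall()
print(rows)
```

Every run scans the whole sales table, which is the cost the incremental case avoids.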

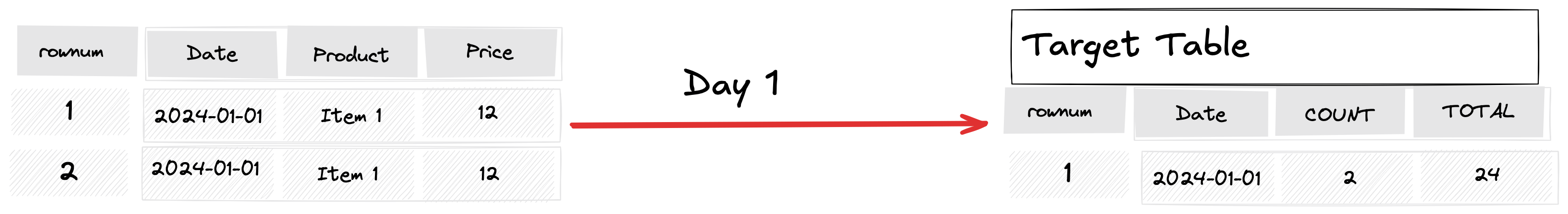

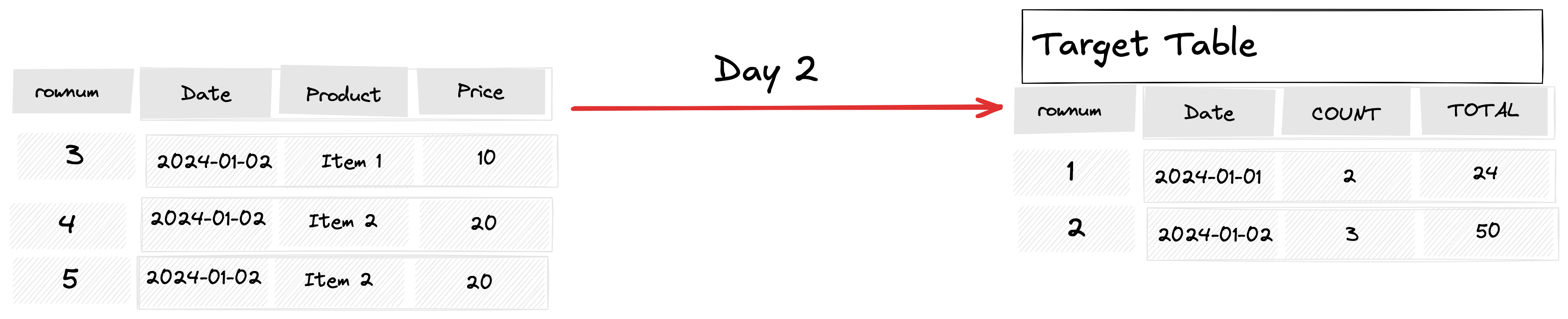

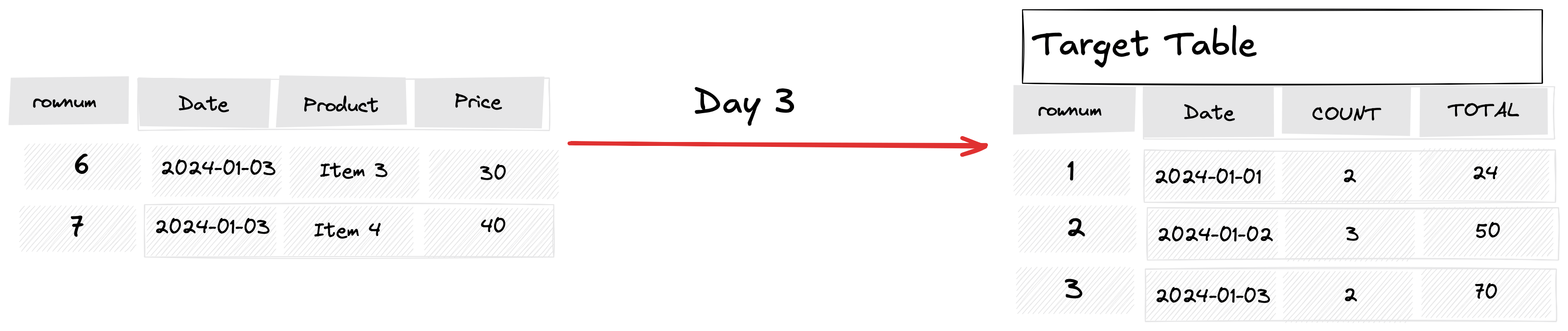

Incremental

As data arrives periodically, we only need to build KPIs on the new data. For example, the daily_sales KPI does not need the whole input dataset, only the data for the day being computed. The computed daily KPIs are then appended to the existing dataset, which greatly reduces the amount of data processed.

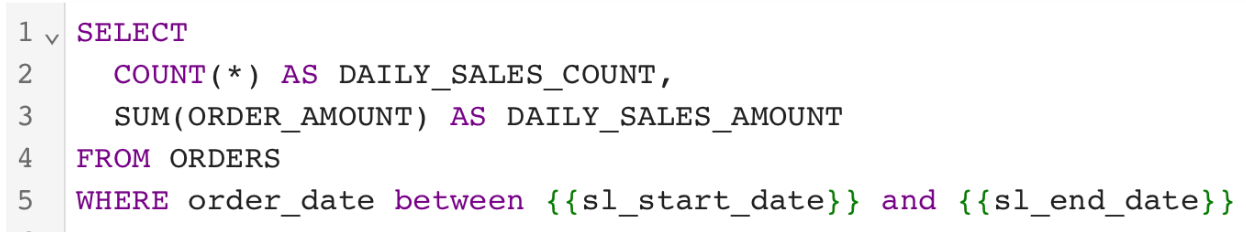

Starlake allows you to write your query as follows:

The sl_start_date and sl_end_date variables represent the start and end of the day for which the query is being executed. These are reserved environment variables that are automatically passed to the transformation process by the orchestrator (e.g., Airflow or Dagster).
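The idea of a query bounded by sl_start_date and sl_end_date can be sketched as follows, in Python with the standard-library sqlite3 module. This is an illustration under stated assumptions, not Starlake's actual syntax. The sales and daily_sales tables and the run_incremental helper are hypothetical, sqlite3 named parameters stand in for the variable substitution that Starlake performs, and the window is assumed to be half-open (start included, end excluded).

```python
import sqlite3

# Hypothetical incremental query: only rows inside [sl_start_date, sl_end_date)
# are read, and the resulting KPI rows are appended to daily_sales.
INCREMENTAL_SQL = """
INSERT INTO daily_sales (day, total)
SELECT date(sold_at), SUM(amount)
FROM sales
WHERE sold_at >= :sl_start_date AND sold_at < :sl_end_date
GROUP BY date(sold_at)
"""


def run_incremental(conn: sqlite3.Connection, sl_start_date: str, sl_end_date: str) -> None:
    """Compute and append the KPI for a single interval."""
    conn.execute(
        INCREMENTAL_SQL,
        {"sl_start_date": sl_start_date, "sl_end_date": sl_end_date},
    )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (sold_at TEXT, amount REAL)")
conn.execute("CREATE TABLE daily_sales (day TEXT, total REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [
        ("2024-01-01 09:00:00", 10.0),
        ("2024-01-01 17:30:00", 5.0),
        ("2024-01-02 11:00:00", 7.0),
    ],
)

# One call per day, as an orchestrator would trigger them.
run_incremental(conn, "2024-01-01 00:00:00", "2024-01-02 00:00:00")
run_incremental(conn, "2024-01-02 00:00:00", "2024-01-03 00:00:00")
daily = conn.execute("SELECT day, total FROM daily_sales ORDER BY day").fetchall()
print(daily)
```

The job itself keeps no record of what has already been processed. The interval bounds arrive from outside, which is what makes the model stateless.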

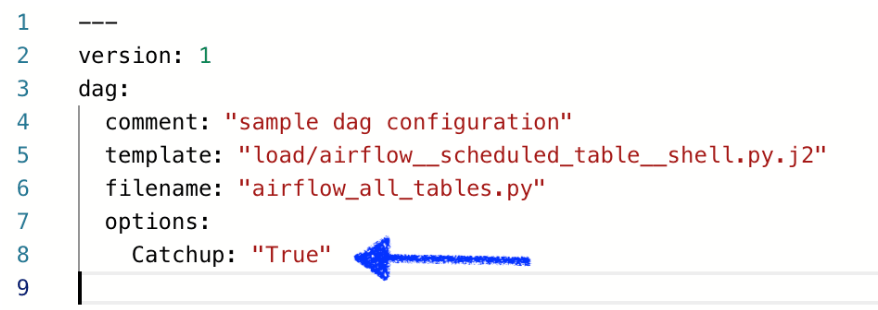

You might wonder what happens if the job fails to run for a specific day. There's no need to worry: Starlake handles this by leveraging Airflow's catchup mechanism. By configuration, Starlake requests the orchestrator to catch up on any missed intervals. To enable this, simply add the catchup flag to your YAML DAG definition in Starlake. As expected, the orchestrator will then run the missed intervals with the sl_start_date and sl_end_date variables set accordingly.
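Conceptually, catching up means replaying one run per missed interval, each with its own pair of bounds. The sketch below illustrates that idea in plain Python. It is not how Airflow or Dagster implement scheduling, and the missed_intervals helper and its daily granularity are assumptions made for the example.

```python
from datetime import date, datetime, time, timedelta


def missed_intervals(last_success: date, today: date) -> list[tuple[datetime, datetime]]:
    """Return one (start, end) pair per full day after last_success and before today."""
    intervals = []
    day = last_success + timedelta(days=1)
    while day < today:
        start = datetime.combine(day, time.min)  # midnight at the start of the day
        intervals.append((start, start + timedelta(days=1)))
        day += timedelta(days=1)
    return intervals


# Last successful run covered 2024-01-01; it is now 2024-01-04.
pending = missed_intervals(date(2024, 1, 1), date(2024, 1, 4))
for sl_start_date, sl_end_date in pending:
    # Each pair would be handed to one run of the incremental query.
    print(sl_start_date.isoformat(), "->", sl_end_date.isoformat())
```

Because each run only depends on the bounds it receives, replaying a missed day is no different from running it on schedule.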

What if you want to backfill a large period of time? Your orchestrator has it all too:

Conclusion

In conclusion, Starlake's integrated and declarative approach simplifies the management of incremental and full-refresh transformations, eliminating the need for complex state management.

By leveraging reserved environment variables like sl_start_date and sl_end_date, Starlake seamlessly integrates with orchestrators such as Airflow and Dagster to handle backfills, missed intervals, and large periods of historical data with ease.

This ensures a clear separation of responsibilities between Starlake and the orchestrator: Starlake's design lets teams focus on writing queries and delivering insights, while orchestration and state management are taken care of for them.